Introduction

In the intricate dance of data management, the question “To Mask, or Not to Mask” echoes the timeless contemplation of “to be, or not to be,” and highlights the pivotal role data plays in software testing and Machine Learning (ML) model training. This article examines real and synthetic data and how each shapes data-driven decision-making in technology. As teams weigh data privacy against efficiency, the balance between masked real data and fabricated synthetic data becomes a cornerstone of innovative and responsible software development.

Understanding Masked Real Data

Masked real data is authentic data in which sensitive elements have been disguised to preserve privacy while keeping the data realistic. Masking is essential when real data is accessible but contains sensitive information such as Personally Identifiable Information (PII). Because only the sensitive fields are altered, the dataset keeps its structure, relationships, and statistical character, so tests produce realistic outcomes without compromising confidentiality. The main benefit is a high level of validity and practicality in test scenarios. However, masking procedures can be complex (for example, masked values must stay consistent across related tables), and masked data inherits the limits of the original data’s structure and variety: it cannot contain scenarios that never occurred in production. Building a good testing environment with it therefore requires a careful balance.
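
To make the idea concrete, here is a minimal Python sketch of two common masking techniques, assuming a customer record with hypothetical fields `customer_id`, `name`, `email`, `phone`, and `order_total`. Identifiers are pseudonymized with a keyed hash (HMAC-SHA256), so the same input always yields the same token and relationships between tables survive masking; the phone number is partially redacted while its format is kept. `MASKING_KEY` is a placeholder, and the code illustrates the technique rather than how any particular masking tool works.

```python
import hashlib
import hmac

# Placeholder key for illustration only; a real deployment would load it from a secrets store.
MASKING_KEY = b"example-masking-key"


def pseudonymize(value: str, prefix: str = "CUST") -> str:
    """Map a value to a stable token: identical inputs yield identical tokens,
    so joins between masked tables still line up."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}-{digest[:10]}"


def mask_email(email: str) -> str:
    """Pseudonymize the local part but keep the domain, which tests may rely on."""
    local, _, domain = email.partition("@")
    return f"{pseudonymize(local, 'user')}@{domain}"


def mask_phone(phone: str, keep_last: int = 4) -> str:
    """Replace all but the last `keep_last` digits with 'X', preserving punctuation and length."""
    total_digits = sum(ch.isdigit() for ch in phone)
    seen = 0
    masked = []
    for ch in phone:
        if ch.isdigit():
            seen += 1
            masked.append(ch if seen > total_digits - keep_last else "X")
        else:
            masked.append(ch)
    return "".join(masked)


def mask_record(record: dict) -> dict:
    """Return a masked copy; non-sensitive fields (e.g. order_total) pass through unchanged."""
    masked = dict(record)
    masked["customer_id"] = pseudonymize(record["customer_id"])
    masked["name"] = pseudonymize(record["name"], "NAME")
    masked["email"] = mask_email(record["email"])
    masked["phone"] = mask_phone(record["phone"])
    return masked


if __name__ == "__main__":
    original = {
        "customer_id": "C-1001",
        "name": "Ada Lovelace",
        "email": "ada@example.com",
        "phone": "555-123-4567",
        "order_total": 42.50,
    }
    print(mask_record(original))
```

In the printed copy the phone becomes `XXX-XXX-4567`, the email keeps `@example.com`, and `order_total` is untouched. A keyed hash is used instead of a plain hash so that someone without the key cannot rebuild the mapping by hashing guessed values; substituting realistic fake names from a lookup list is a common alternative when tests need human-readable values.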

The Role of Synthetic Data

Synthetic data, in contrast to masked real data, is entirely fabricated: it is designed to mimic the characteristics of real datasets without containing actual sensitive information. Generated with AI techniques or from defined business rules, synthetic data is a powerful tool when real data is unavailable, inadequate, or cannot be used in compliance with privacy regulations. Its primary advantage is flexibility and control, allowing testers to model scenarios that real data does not contain, such as rare edge cases or volumes beyond current production. However, creating high-quality synthetic data requires a deep understanding of the underlying data patterns and can be resource-intensive.
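
A rule-based generator can be very small. The Python sketch below assumes a hypothetical `Customer` schema and illustrative business rules (adult ages 18 to 90, a weighted country mix, signup dates from 2020 through 2023). Seeding `random.Random` makes each run reproducible, and a couple of deliberately extreme records stand in for scenarios real data may never contain; the 255-character name assumes a hypothetical column limit.

```python
import random
from dataclasses import dataclass
from datetime import date, timedelta

# Illustrative value pools and weights; a real generator would derive these from business rules.
FIRST_NAMES = ["Alex", "Sam", "Priya", "Chen", "Maria", "Tomás"]
LAST_NAMES = ["Smith", "Okafor", "Nguyen", "García", "Kowalski"]
COUNTRIES = ["US", "GB", "DE", "IN", "BR"]
COUNTRY_WEIGHTS = [0.4, 0.2, 0.15, 0.15, 0.1]


@dataclass
class Customer:
    customer_id: str
    name: str
    age: int
    country: str
    signup_date: date


def generate_customers(n: int, seed: int = 42, edge_cases: bool = True) -> list[Customer]:
    """Generate n rule-conforming customers, optionally followed by boundary records."""
    rng = random.Random(seed)  # seeded, so the same arguments give the same dataset
    start = date(2020, 1, 1)
    customers = []
    for i in range(n):
        customers.append(Customer(
            customer_id=f"SYN-{i:06d}",
            name=f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
            age=rng.randint(18, 90),  # rule: adult customers only
            country=rng.choices(COUNTRIES, weights=COUNTRY_WEIGHTS, k=1)[0],
            signup_date=start + timedelta(days=rng.randint(0, 1460)),  # up to 2023-12-31
        ))
    if edge_cases:
        # Boundary records that production data may never contain.
        customers.append(Customer("SYN-EDGE-1", "A" * 255, 18, "US", start))
        customers.append(Customer("SYN-EDGE-2", "Zoë O'Brien-Ñúñez", 90, "BR", date(2023, 12, 31)))
    return customers


if __name__ == "__main__":
    for customer in generate_customers(3):
        print(customer)
```

Because the generator is seeded, a failing test can be re-run against exactly the same dataset, and raising `n` is enough to produce stress-test volumes.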

Comparative Analysis

Choosing between masked real data and synthetic data is not a one-size-fits-all decision but rather a strategic consideration based on the specific needs of each testing scenario. Masked real data offers authenticity and practical relevance, making it ideal for scenarios where the testing environment needs to mirror real-world conditions closely. Synthetic data, however, provides an invaluable alternative for exploratory testing, stress testing, or when compliance and privacy concerns restrict the use of real data. Each method has its unique strengths and weaknesses, and their effective use often depends on the nature of the software being tested, the specific testing requirements, and the available resources.

Tools for Data Management

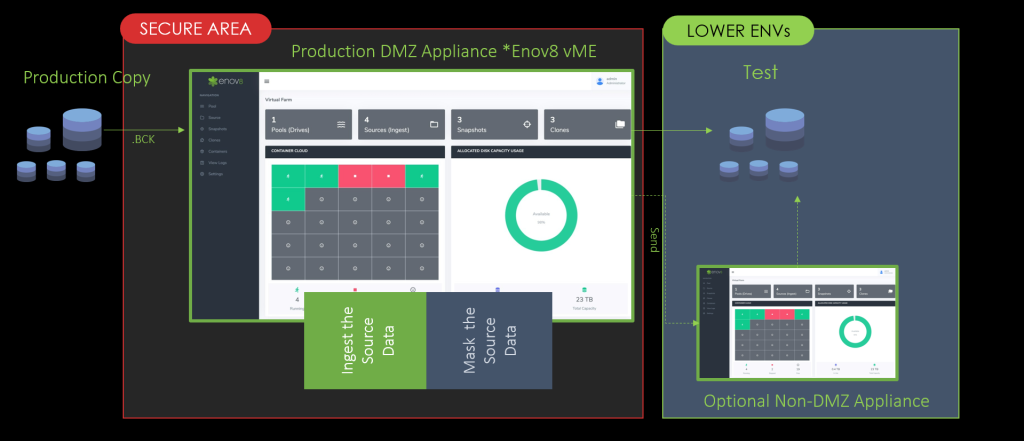

In the competitive landscape of data management, Enov8, Delphix, and Broadcom TDM each offer distinct capabilities. Enov8 specializes in holistic test data management and database virtualization. Delphix is known for its agile approach to data management and efficient data masking. Meanwhile, Broadcom TDM excels in automated generation and management of synthetic test data. Each tool addresses a different aspect of data management, whether privacy, compliance, or varied testing scenarios.

Strategic Decision-Making in Data Utilization

In test data management, the decision to use masked real data or synthetic data depends on several factors: the specific testing requirements, the availability and nature of production data, compliance with data privacy laws, and the organization’s resource capabilities. For instance, when testing for data privacy compliance or customer-centric scenarios, masked real data might be more appropriate. Conversely, for stress testing or when dealing with highly sensitive data, synthetic data might be the safer, more compliant choice. The key is understanding the strengths and limitations of each method and applying them strategically to meet the diverse and evolving needs of software testing and ML model training.
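
These factors can be written down as an explicit, reviewable policy. The following Python sketch is one hypothetical encoding of the guidance in this section; the `ScenarioProfile` fields and the order in which rules are checked are assumptions, not a standard, and a real policy would weigh more factors.

```python
from dataclasses import dataclass
from enum import Enum


class DataSource(Enum):
    MASKED_REAL = "masked real data"
    SYNTHETIC = "synthetic data"


@dataclass(frozen=True)
class ScenarioProfile:
    production_data_available: bool
    highly_sensitive: bool       # e.g. data whose copying privacy law restricts
    needs_realism: bool          # customer-centric or production-like scenarios
    stress_test: bool            # volumes or edge cases beyond what production holds
    can_maintain_masking: bool   # team has resources to build and run masking jobs


def recommend_source(p: ScenarioProfile) -> DataSource:
    """Hypothetical policy: rules are checked in order and the first match wins."""
    if not p.production_data_available:
        return DataSource.SYNTHETIC    # nothing real to mask
    if p.highly_sensitive:
        return DataSource.SYNTHETIC    # avoid copying the data at all
    if p.stress_test:
        return DataSource.SYNTHETIC    # generate volume and shapes on demand
    if p.needs_realism and p.can_maintain_masking:
        return DataSource.MASKED_REAL  # realism is worth the masking effort
    return DataSource.SYNTHETIC        # assumed default when realism is not required


if __name__ == "__main__":
    profile = ScenarioProfile(
        production_data_available=True,
        highly_sensitive=False,
        needs_realism=True,
        stress_test=False,
        can_maintain_masking=True,
    )
    print(recommend_source(profile).value)
```

Putting the rules in code makes the trade-off auditable: a customer-centric regression suite on non-sensitive production data, with a masking pipeline in place, resolves to masked real data, while the same suite run as a stress test resolves to synthetic data.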

Future Trends and Innovations

As we look to the future, the field of data management is poised for significant innovations, particularly in the realms of data masking and synthetic data generation. Advancements in AI and machine learning are expected to make synthetic data even more realistic and easier to generate, while new techniques in data masking could offer greater flexibility and efficiency. The growing emphasis on data privacy and the increasing complexity of data environments will likely drive these innovations. As these technologies evolve, they will provide more nuanced and sophisticated tools for software testers and ML practitioners, further blurring the lines between real and synthetic data.

Conclusion

In conclusion, the decision to use masked real data or synthetic data in software testing and ML model training is a strategic one, reflecting the evolving complexities of data management. Enov8, Delphix, and Broadcom TDM, each with their unique capabilities, provide a range of options in this arena. The choice hinges on specific requirements for privacy, compliance, and testing scenarios, highlighting the importance of a nuanced approach to data handling in today’s technology landscape. As data management tools continue to evolve, they will play a pivotal role in shaping efficient and responsible software development practices.